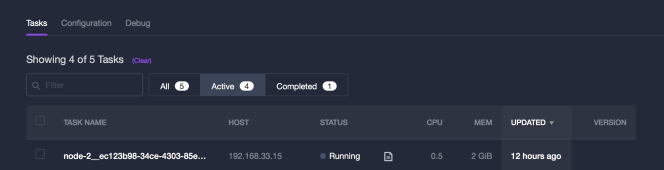

In the blog post "Install dev environment for Kafka, Nodejs and node-red" I described how to install a Kafka and WebStorm environment on a virtual machine. For production use, we need a highly available Kafka installation. The idea is to use an orchestration framework like DCOS. This post is based on the Mesosphere documentation at https://docs.mesosphere.com/1.8/usage/tutorials/iot_pipeline/.

Author Archives: matthiasfuchs2014

Install dev environment for Kafka, Nodejs and node-red

To prepare a Node.js Docker image with a JavaScript application for DCOS, I need a small development environment. I plan to install Kafka, Node.js and node-red on a Linux-based system. The idea is to have a small environment for developing a web interface for WebSocket connections.

Install Cloud Control 13.2 on Mac and Virtual Box

I started the installation of Cloud Control as described in the Oracle documentation. My hardware and software environment was:

Oracle VirtualBox 5.0.x, 5.1.x

MacBook Pro 11,4

Processor: 2.2 GHz Intel Core i7

I tested it with CentOS 7.2 and 6.8 and always hit the same problem.

Continue reading

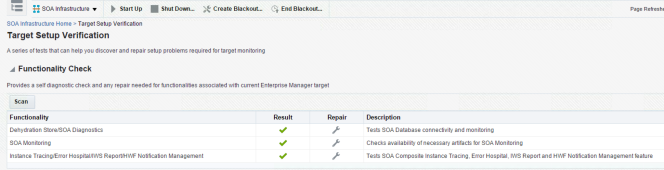

Cloud Control 13.2 and SOA Suite 12.2.1.1

The intention is to monitor a SOA/BPM cluster installation with two managed servers on two hosts using Cloud Control 13.2. WebLogic and the Cloud Control agent run under different users.

The first step is to install the agents on the two servers. Each server hosts one managed server. The easiest way to install the agents is with the AgentPull script.

Here is a short script; for further information about the agent installation, see the Oracle documentation.

Installing DCOS – Part2

In the first blog post about installing DCOS, I described the normal procedure for installing it with the CentOS base image. There are some issues:

- overlay file system does not work (Docker)

- small VM file system

- can’t start several apps from the DCOS Universe

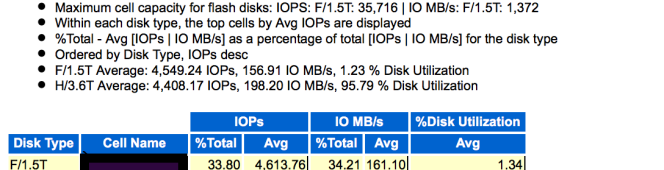

how oracle exadata write-back flash cache (wbfc) turns your life

Write-back flash cache (WBFC) has been a feature of Oracle Exadata since version 11.2.3.2 and has been available since 2012. By default it is turned off. Do I need WBFC?

specifications

When you look at the performance benchmarks of Oracle Exadata, disk performance decreased from 10,800 IOPS (X2-2 Quarter, HP disks) to 7,800 IOPS (X6-2 Quarter, HC disks), while the disk size increased from 600 GB to 8 TB. If you want to write random I/O to an X6 without write-back flash cache, it can be a nightmare compared to an X2-2 machine.
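To make the effect of these numbers tangible, here is a small worked calculation of how many IOPS remain per unit of disk capacity. It is only a sketch: it assumes both quarter racks contain the same number of disks (so the disk count cancels out) and treats 8 TB as 8,000 GB. The function name `iops_density_ratio` is made up for this illustration.

```python
# Figures quoted in the text: quarter-rack disk IOPS and per-disk capacity in GB.
x2_iops, x2_disk_gb = 10_800, 600      # X2-2 Quarter, HP disks
x6_iops, x6_disk_gb = 7_800, 8_000     # X6-2 Quarter, HC disks (8 TB taken as 8,000 GB)


def iops_density_ratio(old_iops, old_gb, new_iops, new_gb):
    """Return the new IOPS-per-GB divided by the old IOPS-per-GB.

    Assumption: both racks hold the same number of disks, so the
    disk count cancels and per-disk capacity can be used directly.
    """
    return (new_iops / new_gb) / (old_iops / old_gb)


ratio = iops_density_ratio(x2_iops, x2_disk_gb, x6_iops, x6_disk_gb)

print(f"IOPS change:     {x6_iops / x2_iops:.2f}x")
print(f"Capacity change: {x6_disk_gb / x2_disk_gb:.1f}x")
print(f"IOPS per GB:     {ratio:.3f}x (about {1 / ratio:.1f} times lower)")
```

Under these assumptions, each gigabyte on the X6-2 has roughly 18 times fewer disk IOPS behind it than on the X2-2, which is why random writes that bypass the flash cache hurt so much more on the newer machine.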

Installing DCOS with your own Virtualbox VMs

Installing DCOS with VirtualBox is easy. Follow the instructions at https://github.com/dcos/dcos-vagrant/tree/v0.6.0 and you are finished in a short time.

In this guide I want to show how to install DCOS with your own virtual machines, like an on-premises install.

Continue reading

ACFS Replication between two Exadata’s

In this blog post I describe the configuration of ACFS replication between two Exadata machines. ACFS is a cluster file system based on Oracle ASM (Automatic Storage Management). It is available on Oracle Database clusters with ASM and also on Exadata installations. I found some documentation, but only a few details are available. This post adds information about monitoring and is optimized for use with Exadata. The installation is based on X5 Exadata hardware and Oracle 12c (12.1.0.2) grid and database software.

Continue reading

DOAG Conference Presentations 2015

Join my presentations at the German Oracle User Group Conference 2015 in Nuremberg. My focus is on Hadoop with Oracle Database.

Hadoop – Eine Erweiterung für die Oracle DB? (Hadoop: an extension for the Oracle DB?)

17.11, 16:00, Kiew

Aufbau eines Semantic Layers zwischen DB und Hadoop (Building a semantic layer between DB and Hadoop)

18.11, 11:00, Helsinki

Hope to see you there!

Data Transformation in Big Data Appliance or Oracle Exadata – a comparison

In a data warehouse environment, new data is often read from CSV files. These CSV files are connected to the database as external tables. In this scenario you need a highly available file system where the text files are stored. The idea is to use HDFS, the Hadoop file system, as a highly available, high-performance file system. HDFS then serves both as that file system and as a database where initial transformations can be executed without stressing the main database.

In this blog post I show how to integrate this data into the data warehouse and perform some simple transformations such as generating keys. I use Big Data SQL external tables to connect. On the one hand I create everything in the database; on the other hand I create the keys in a Hive/Hadoop environment. The keys are based on MD5 hashes, as described in the last blog post.
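As a rough illustration of what an MD5-based key looks like, here is a minimal Python sketch. It is not the code from the post, which generates the keys in the database and in Hive. The function name `md5_key`, the `|` delimiter, and the trim/upper-case normalization are assumptions chosen for this example.

```python
import hashlib


def md5_key(*business_key_parts, delimiter="|"):
    """Build a key as the MD5 hex digest of the joined business key parts.

    Assumptions for this sketch: parts are trimmed and upper-cased so that
    formatting differences do not change the key, and a delimiter separates
    the parts so that ("ab", "c") and ("a", "bc") yield different keys.
    """
    normalized = [str(part).strip().upper() for part in business_key_parts]
    joined = delimiter.join(normalized)
    return hashlib.md5(joined.encode("utf-8")).hexdigest()


# Hypothetical business key: a customer number plus a country code.
key = md5_key("cust-0042", "DE")
print(key)  # 32 hexadecimal characters
```

Because the key depends only on the input values, the same row gets the same key whether it is computed in the database or in Hive, provided both sides apply the same normalization and delimiter.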

Continue reading